hpurmann

Managing secrets the GitOps way

This post is a follow-up to last week's post on Kubernetes and GitOps.

Secrets are an integral part of any application: applications need credentials to access a database or to authenticate against other external services. To store secrets, it is advisable to use a dedicated secret storage system. Nowadays there are several options, many of them open source.

One of those options is Vault by HashiCorp, probably the most popular one. Vault stores secrets in a tree-like structure, which allows fine-grained policy definitions. It supports authentication via GitHub, and Jenkins plugins are available so that jobs can retrieve secrets.

kubernetes-vault

When we started using Kubernetes, we looked for a way to populate our secrets from Vault. First, we tried Boostport's kubernetes-vault controller. It watches for new pods with a certain annotation and expects them to define a special init container. As we rolled it out to more deployments, we realized how cumbersome this approach actually was:

- Each deployment needs an additional init container, a volume and a volume mount, which involves a lot of copy-pasting.

- It creates a runtime dependency on Vault. Because our Vault deployment was not highly available, it became a single point of failure.

Sealed secrets

At that time we read about GitOps and Weaveworks’ recommendation to use Sealed Secrets.

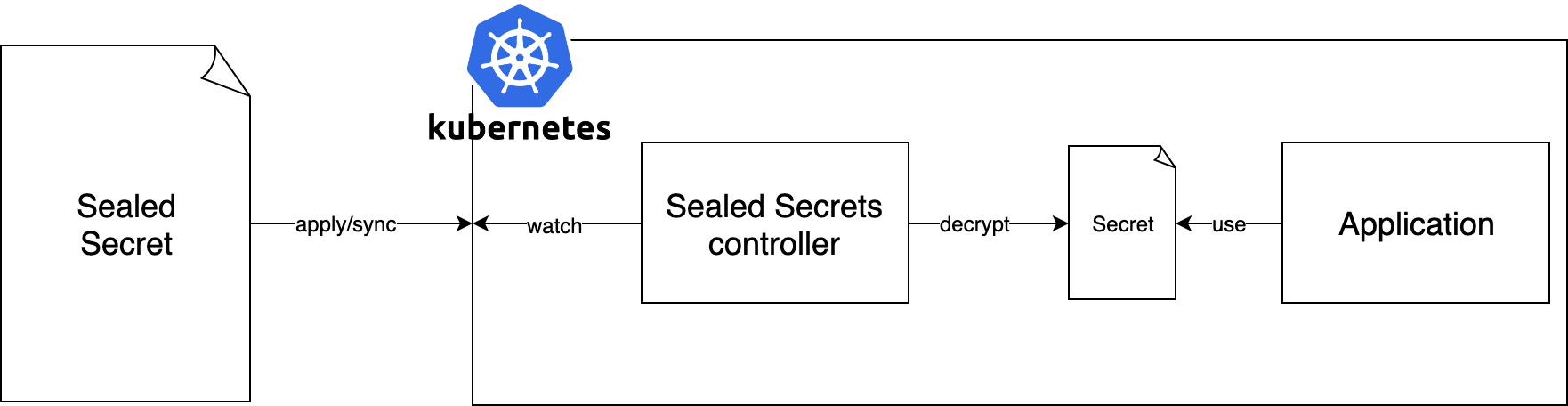

The idea is simple: you encrypt your secrets locally with a public key, and only the cluster holds the private key needed to decrypt them.

Sealed Secrets introduces a custom resource definition called SealedSecret. The sealed secrets controller runs in its own namespace and watches for SealedSecret resources.

It transparently decrypts the SealedSecret and creates a Secret with the same name and namespace.

This approach makes it possible to store the encrypted secrets in version control, similar to how Travis CI handles encrypted environment variables. It also solves both problems mentioned above:

- Each deployment can expect secret resources to be there and reference them by name.

- Deployments are independent of the availability of Vault.

But how do we turn Vault secret values into sealed secrets? Let's look at that next.

Sealed secrets workflow

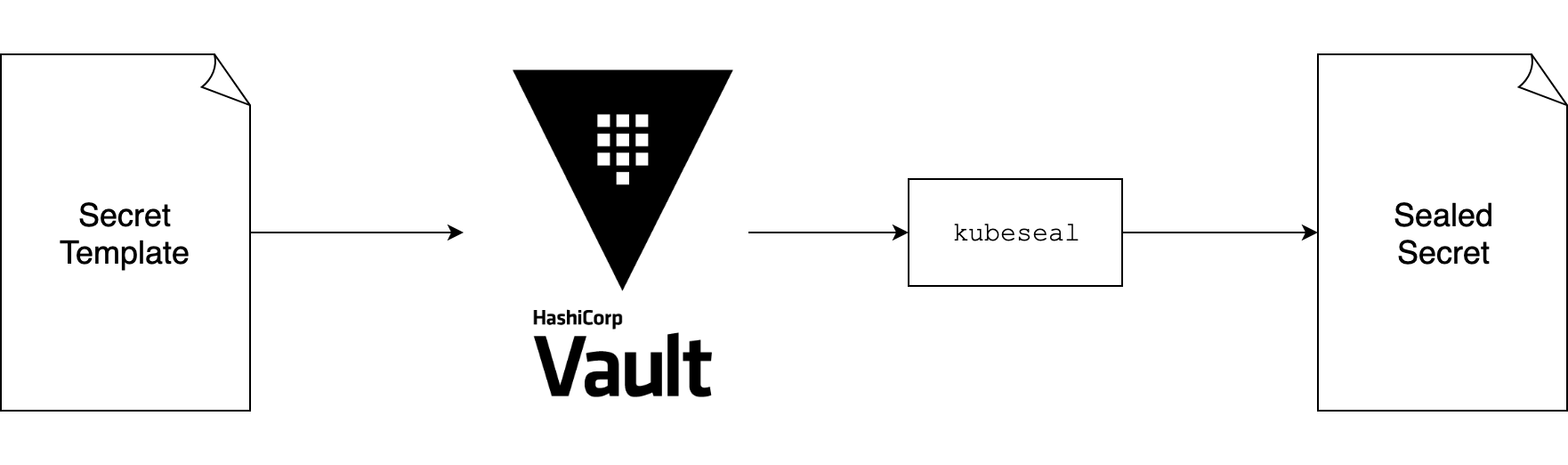

Inspired by Helm and its use of Go templates, my colleague Thomas Rucker wrote vault-template, a Go template function that fetches secrets from Vault.

Using this, we are able to write secret template files such as this:

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: mysecret
  namespace: production
type: Opaque
# stringData accepts plain-text values; data would require base64-encoded values.
stringData:
  config.yml: |
    database:
      admin_username: {{ vault "secret/database" "username" }}
      admin_password: {{ vault "secret/database" "password" }}
```

The vault template function fetches the secret at secret/database and reads its username and password fields, respectively.
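Go's text/template makes this kind of helper straightforward: a function registered in a FuncMap can be called from the template, and returning a non-nil error aborts rendering. The sketch below assumes a hypothetical in-memory map (fakeVault) in place of a Vault server; it shows the mechanism only and is not vault-template's actual code.

```go
package main

import (
	"fmt"
	"os"
	"strings"
	"text/template"
)

// fakeVault is a hypothetical in-memory stand-in for Vault: path -> field -> value.
var fakeVault = map[string]map[string]string{
	"secret/database": {"username": "admin", "password": "hunter2"},
}

// vaultLookup matches the template call {{ vault "path" "field" }}.
// Returning an error makes template execution fail instead of emitting an empty value.
func vaultLookup(path, field string) (string, error) {
	fields, ok := fakeVault[path]
	if !ok {
		return "", fmt.Errorf("no secret at %q", path)
	}
	value, ok := fields[field]
	if !ok {
		return "", fmt.Errorf("secret %q has no field %q", path, field)
	}
	return value, nil
}

// render parses tmpl with the vault function available and executes it.
func render(tmpl string) (string, error) {
	t, err := template.New("secret").Funcs(template.FuncMap{"vault": vaultLookup}).Parse(tmpl)
	if err != nil {
		return "", err
	}
	var sb strings.Builder
	if err := t.Execute(&sb, nil); err != nil {
		return "", err
	}
	return sb.String(), nil
}

func main() {
	out, err := render(`admin_username: {{ vault "secret/database" "username" }}` + "\n")
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	fmt.Print(out)
}
```

Failing loudly on a missing path or field is a deliberate choice here: a silently empty password would only surface later, after the secret has been sealed and deployed.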

Once the template has been rendered, sealing the resulting Secret is just a matter of invoking kubeseal, the CLI for Sealed Secrets.

To make this process easier for the development teams, we wrote a small wrapper that also preserves the existing folder structure. The first draft was a Bash script, which we later turned into a Helm plugin.

Learnings

Because only the cluster holds the private key needed to unseal, changes made to the sealed representation cannot be reviewed. Moreover, because a random session key is generated during encryption, the sealed result differs on every invocation, even when the input is unchanged. This further complicates code reviews, so my team agreed to avoid unnecessary re-seals.

On a side note, Mozilla's SOPS addresses this by allowing multiple key pairs, one of which can live on the developer's machine so that changes can be decrypted and reviewed locally. This approach seems worth a closer look.

Another issue concerns secret rotation: because there is no direct connection between secret consumers and the secret store, automated secret rotation is hard to achieve.

Conclusion

Sealed Secrets allows us to follow through on the GitOps approach and keep all of our configuration in a Git repository. Even though the future of the project is unclear right now, I certainly hope it continues to grow and stays maintained.